As mentioned in the Trezor blog post, their latest security patch fixes a flaw I disclosed to them in Jan 2019. This flaw meant an attacker with physical access to the wallet could find the recovery seed stored in FLASH and leave no evidence of tampering.

This work was heavily inspired by the wallet.fail disclosure – I’m directly dumping FLASH instead of forcing the flash erase and then dumping from SRAM, so the results are the same but reached by a different path. It also has the same limitations – if you used a password-protected recovery seed, you can’t dump that.

Practically, it has the advantage of not requiring you to modify or tamper with the enclosure at all, either to do the fault injection or to read the data off. Wallet.fail uses the physical JTAG connection, whereas I’m using the USB connection. But by comparison the wallet.fail attack is more robust and easier to apply – my EMFI attack can be fiddly to set up, and there is some inherent timing jitter due to the USB stack processing. Without further effort to speed up my attack, it’s likely the process of opening the enclosure & applying the wallet.fail method would be faster in practice.

Please do not contact me if you have a “forgotten password” or something similar with your Trezor. I’m not willing to perform this work under any circumstances.

Summary

USB requests that read from memory (such as a USB descriptor read) should return at most MIN(requested_size, size_of_data_structure) bytes. The code as written starts from the requested size and only then overwrites it with the smaller “valid” value.

Using fault injection (here demo’d with EMFI), this check can be skipped. The device will accept up to 0xFFFF as a size argument, giving an attacker access to read large chunks of either SRAM or FLASH (depending where the descriptor is stored).

Due to the FLASH memory layout, the sensitive metadata lies roughly AFTER the descriptors stored in bootloader flash space. Thus an attacker can read out the metadata structure from memory (seed etc). Notably this does not require one to open the enclosure due to use of EMFI.
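To make the failure mode concrete, here is a toy Python model of the length check. Everything in it is illustrative: the byte strings, sizes and the `handle_request` helper are made up for this sketch and do not reflect the real Trezor memory map. It only shows why skipping a single `MIN()` turns a descriptor read into a read of whatever follows the descriptor.

```python
# Toy model of the descriptor read; the layout and sizes are illustrative only.
DESCRIPTOR = bytes(range(0x10))            # stand-in for a 16-byte descriptor
PADDING = b"\xff" * 8                      # stand-in for whatever sits in between
METADATA = b"SECRET-SEED-PLACEHOLDER"      # stand-in for the metadata region
FLASH = DESCRIPTOR + PADDING + METADATA    # descriptor first, metadata after it


def handle_request(requested_len, clamp_skipped=False):
    """Return the bytes the modelled device would send for a descriptor read."""
    length = requested_len                 # default: accept the requested size
    if not clamp_skipped:
        # This is the check the glitch targets.
        length = min(length, len(DESCRIPTOR))
    return FLASH[:length]                  # the read starts at the descriptor


normal = handle_request(0xFFFF)
glitched = handle_request(0xFFFF, clamp_skipped=True)
print(len(normal), len(glitched))
print(METADATA in glitched)
```

In the model, the unglitched read returns only the descriptor, while the glitched read runs on to the end of the modelled memory and includes the placeholder metadata.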

Using voltage glitching would almost certainly work as well, but it likely requires opening the enclosure, making tampering more evident (though it might even work via the USB +5V input, which wouldn’t require opening the enclosure).

Details

I’ve chosen to demo this with WinUSB descriptor requests, as they have a very simple structure. In addition, the WinUSB implementation has one descriptor in FLASH and one in SRAM, giving you the ability to choose which memory segment to dump. Other USB requests should work too, but I haven’t tested them.

Consider the following function, where the critical calls are the len = MIN(len,…) calls (two of them can be found):

    static int winusb_control_vendor_request(usbd_device *usbd_dev,
                                             struct usb_setup_data *req,
                                             uint8_t **buf, uint16_t *len,
                                             usbd_control_complete_callback* complete) {
        (void)complete;
        (void)usbd_dev;

        if (req->bRequest != WINUSB_MS_VENDOR_CODE) {
            return USBD_REQ_NEXT_CALLBACK;
        }

        int status = USBD_REQ_NOTSUPP;
        if (((req->bmRequestType & USB_REQ_TYPE_RECIPIENT) == USB_REQ_TYPE_DEVICE) &&
            (req->wIndex == WINUSB_REQ_GET_COMPATIBLE_ID_FEATURE_DESCRIPTOR)) {
            *buf = (uint8_t*)(&winusb_wcid);
            *len = MIN(*len, winusb_wcid.header.dwLength);
            status = USBD_REQ_HANDLED;
        } else if (((req->bmRequestType & USB_REQ_TYPE_RECIPIENT) == USB_REQ_TYPE_INTERFACE) &&
                   (req->wIndex == WINUSB_REQ_GET_EXTENDED_PROPERTIES_OS_FEATURE_DESCRIPTOR) &&
                   (usb_descriptor_index(req->wValue) == winusb_wcid.functions[0].bInterfaceNumber)) {
            *buf = (uint8_t*)(&guid);
            *len = MIN(*len, guid.header.dwLength);
            status = USBD_REQ_HANDLED;
        } else {
            status = USBD_REQ_NOTSUPP;
        }

        return status;
    }

We can see from disassembly this is a simple comparison to the maximum size. I’m mostly going to be looking at the guid structure since it’s located in FLASH below the metadata. Glitching the code in the red box below would create our required vulnerability:

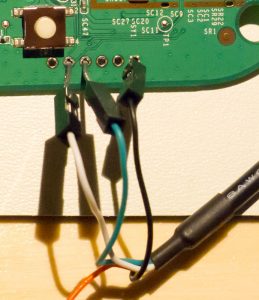

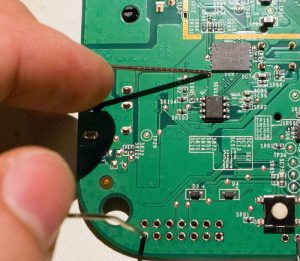

The glitch is inserted with a ChipSHOUTER EMFI tool. The Trezor is held in bootloader mode with two spacers on the buttons, and the EMFI tool is positioned above the case, as shown below:

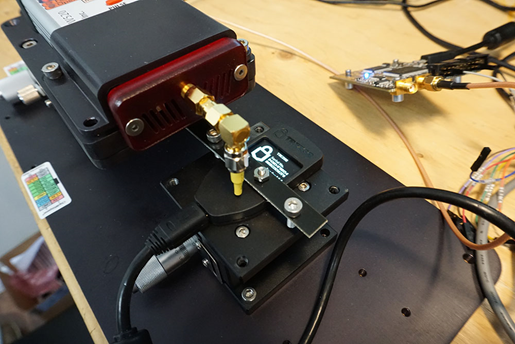

The entire setup is shown below, which has the ChipSHOUTER (bottom left), a ChipWhisperer-Lite (top left) for trigger delay, a Beagle 480 for USB sniffing/triggering (top center) and a switchable USB hub to power-cycle the Trezor when the EMFI triggers the various hard fault/stack smashing/memory corruption errors (top right).

The forced bootloader entry mode simplifies the glitch, as we can freely power cycle the device and try many glitches.

A glitch is timed based on a Total Phase USB analyzer. The USB request is sent from a host computer as shown below:

    def get_winusb(dev, scope):
        """WinUSB Request is most useful for glitch attack"""
        scope.io.glitch_lp = True   # Enable glitch (actual trigger comes from Total Phase USB Analyzer)
        scope.arm()
        resp = dev.ctrl_transfer(int('11000001', 2), ord('!'), 0x0, 0x05, 0xFFFF, timeout=1)
        resp = list(resp)
        scope.io.glitch_lp = False  # Disable glitch
        return resp

This corresponds to reading the “guid”. The Beagle 480 is set up to generate a trigger on the first two bytes of this request:
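For reference, the bytes of that request can be worked out from the arguments to `ctrl_transfer`. The sketch below packs the standard 8-byte USB SETUP packet and decodes the `bmRequestType` bit fields; the helper names `setup_packet` and `decode_request_type` are my own for illustration and are not part of pyusb or of the original tooling.

```python
import struct


def setup_packet(bm_request_type, b_request, w_value, w_index, w_length):
    """Pack the 8-byte USB SETUP packet (multi-byte fields are little-endian)."""
    return struct.pack("<BBHHH", bm_request_type, b_request,
                       w_value, w_index, w_length)


def decode_request_type(bm_request_type):
    """Split bmRequestType into (direction, type, recipient) per USB 2.0 ch. 9."""
    direction = "device-to-host" if bm_request_type & 0x80 else "host-to-device"
    req_type = ["standard", "class", "vendor", "reserved"][(bm_request_type >> 5) & 0x3]
    recipients = {0: "device", 1: "interface", 2: "endpoint", 3: "other"}
    recipient = recipients.get(bm_request_type & 0x1F, "reserved")
    return direction, req_type, recipient


# Same arguments as the ctrl_transfer() call in get_winusb().
packet = setup_packet(int('11000001', 2), ord('!'), 0x0, 0x05, 0xFFFF)
print(packet.hex())
print(decode_request_type(packet[0]))
```

The first two bytes of the packet are `bmRequestType` and `bRequest`, so a trigger on the first two bytes of this request corresponds to matching `0xC1 0x21` under this packing.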

To confirm the timing, I also compiled my own bootloader and saw that the sensitive operation typically occurred 4.2 to 5.7 µs after the Beagle 480 trigger. There was some jitter due to the USB stack processing.

A ChipWhisperer-Lite is then used to generate a 4.4 µs offset (empirically a good “waiting” place), after which an EMFI pulse is triggered. Should the 4.4 µs offset happen to line up with the length checking/limiting, we get a successful glitch.
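If the delay generator counts its offset in clock cycles rather than in time units, the 4.4 µs figure has to be converted. The sketch below does that arithmetic; the 100 MHz clock is purely an assumption for the example (the actual clock used in this setup is not stated here), and `delay_to_cycles` is a hypothetical helper, not a ChipWhisperer API call.

```python
def delay_to_cycles(delay_ns, clock_hz):
    """Convert a trigger delay in nanoseconds into whole clock cycles."""
    return round(delay_ns * clock_hz / 1_000_000_000)


CLOCK_HZ = 100_000_000  # ASSUMPTION: 100 MHz reference clock, for illustration only

offset_cycles = delay_to_cycles(4400, CLOCK_HZ)       # the 4.4 us waiting place
window_cycles = (delay_to_cycles(4200, CLOCK_HZ),     # start of observed window
                 delay_to_cycles(5700, CLOCK_HZ))     # end of observed window
print(offset_cycles, window_cycles)
```

Expressing the observed 4.2 to 5.7 µs window in the same units makes it easy to see how much of the window a single fixed offset covers.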

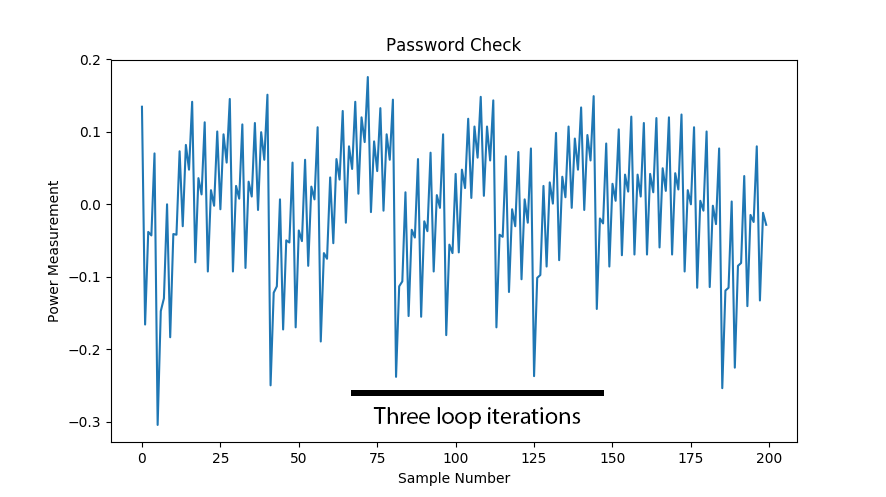

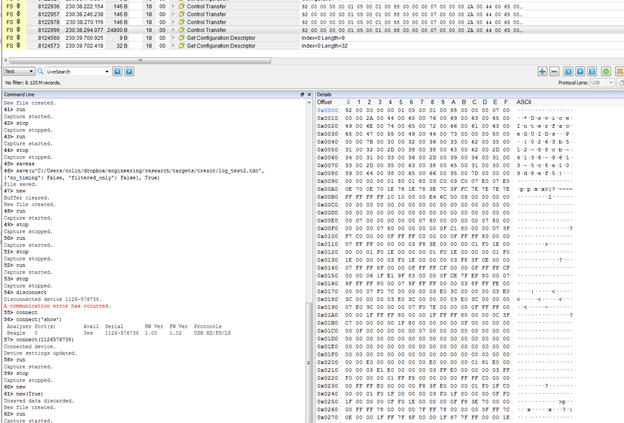

A successful glitch will return much more data than expected – possibly up to the full 0xFFFF, but because things are getting corrupted it sometimes seems to be less. Also, some OSes limit the maximum size you can request; you can find some references to that on the libusb mailing list. As a first step you might just try 0x1FF or something similar (anything larger than the correct 0x92 response). Here is a screenshot showing three correct transfers (146 bytes transferred) followed by an incorrect one, which dumped 24800 bytes:

Here is an example of the metadata from another dump (this doesn’t correspond to the screenshot above). Note that I never set a PIN, as I had damaged the screen of the first device I tested with, which was also being used for development:

Simulating the Glitch

Note you can “simulate” this glitch by simply commenting out the length checking operation in the WinUSB request. This allows you to confirm that should that operation be skipped, you can dump large chunks of memory including the metadata.

This simulation could be useful in validating some of the proposed fixes as well (I would highly suggest doing such testing as part of regular building).
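One way such a check could be wired into a test suite is sketched below. It is only a sketch: `FakeDevice` is a hypothetical stand-in that mimics the argument order of pyusb's `ctrl_transfer`, so the check can be exercised without hardware, and `response_is_bounded` is an illustrative helper. Against a real device you would pass the actual device object instead.

```python
EXPECTED_GUID_LEN = 0x92  # 146 bytes, the correct response size for this request


def response_is_bounded(resp, expected_len=EXPECTED_GUID_LEN):
    """True if the device returned no more than the descriptor it should."""
    return len(resp) <= expected_len


class FakeDevice:
    """Hypothetical offline stand-in with a pyusb-like ctrl_transfer()."""

    def __init__(self, clamp_enabled):
        self.clamp_enabled = clamp_enabled
        self.memory = bytes(0x400)  # dummy memory starting at the descriptor

    def ctrl_transfer(self, bmRequestType, bRequest, wValue, wIndex,
                      wLength, timeout=None):
        # clamp_enabled=False models the length check being commented out.
        length = min(wLength, EXPECTED_GUID_LEN) if self.clamp_enabled else wLength
        return self.memory[:length]


def oversized_read(dev, requested_len=0x1FF):
    """Ask for more than the descriptor size, as a glitch attempt would."""
    return dev.ctrl_transfer(0xC1, ord('!'), 0x0, 0x05, requested_len, timeout=1)


print(response_is_bounded(oversized_read(FakeDevice(clamp_enabled=True))))
print(response_is_bounded(oversized_read(FakeDevice(clamp_enabled=False))))
```

A build with the length check removed should fail this check unless a second line of defence (such as a hard size cap or a guarded memory region) stops the over-read.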

Reliability Notes

Note that due to the timing jitter the glitch is very unreliable (<0.1% success rate). However, because attempts can be made so quickly, this typically translates to a search of a few hours. So it remains extremely practical, since it seems reasonable for an attacker to have access to a wallet for a few hours.
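The relationship between a low per-attempt success rate and total search time is simple arithmetic, sketched below. It assumes each attempt is independent with the same success probability, which is a simplification. The 0.1% figure is the upper bound quoted above; the seconds-per-attempt value is a made-up placeholder, not a measurement.

```python
import math


def expected_attempts(p_success):
    """Mean number of attempts until the first success (geometric distribution)."""
    return 1.0 / p_success


def attempts_for_confidence(p_success, confidence):
    """Smallest n with P(at least one success in n attempts) >= confidence."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_success))


def hours_needed(attempts, seconds_per_attempt):
    """Wall-clock time for a given number of attempts."""
    return attempts * seconds_per_attempt / 3600.0


P_SUCCESS = 0.001            # upper bound from the "<0.1%" figure
SECONDS_PER_ATTEMPT = 5.0    # PLACEHOLDER: includes any power cycling, not measured

n_mean = expected_attempts(P_SUCCESS)
n_95 = attempts_for_confidence(P_SUCCESS, 0.95)
print(n_mean, n_95, hours_needed(n_95, SECONDS_PER_ATTEMPT))
```

Plugging in a measured attempt rate for your own setup gives a rough estimate of how long an attacker would need the device.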

I suspect this could be improved by doing the USB request from an embedded system (something like a GreatFET), which would allow me to synchronize a device to the internal state of the Trezor. This would mean the operation would be:

- Send a USB request (get descriptor etc).

- After the response to that request comes back, send our WinUSB/glitch target request a precisely defined amount of time after we received the response.

- Insert the glitch.

Simple Fixes / Countermeasures

1) The low-level USB functions should accept some absolute maximum size. For example, if you only ever do 64-byte transfers, never allow a larger transfer to occur by masking upper bits to force them to zero. Do this in multiple spots to make glitching past this more difficult.

2) The metadata needs to be guarded by invalid memory segments. A read that runs from a valid segment into the metadata should hit an invalid segment first, causing an exception.

3) Rearrange the descriptor/metadata layout (less useful than #2 in practice).
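The first two countermeasures can be modelled in a few lines. This is a Python model only, not firmware code: the real fixes would live in the C USB stack and in the memory protection configuration. The cap value `ABS_MAX_MASK`, the addresses, and the helper names are all hypothetical and chosen for illustration.

```python
ABS_MAX_MASK = 0x00FF   # HYPOTHETICAL absolute cap (255 bytes); choose per device
DESCRIPTOR_LEN = 0x92   # the correct guid response size


def bounded_length(requested_len, min_skipped=False):
    """Length check with redundant masking around the MIN() the glitch targets."""
    length = requested_len & ABS_MAX_MASK      # mask before the comparison
    if not min_skipped:                        # min_skipped=True models a glitch
        length = min(length, DESCRIPTOR_LEN)
    return length & ABS_MAX_MASK               # mask again afterwards


class GuardFault(Exception):
    """Stands in for the exception raised on touching an invalid segment."""


def checked_read(start, length, guard_start, guard_end):
    """Refuse any read whose range [start, start + length) overlaps the guard."""
    if start < guard_end and start + length > guard_start:
        raise GuardFault(f"read 0x{start:x}+0x{length:x} crosses guard region")
    return (start, start + length)


print(hex(bounded_length(0xFFFF)))
print(hex(bounded_length(0xFFFF, min_skipped=True)))
```

In this model a single skipped `MIN()` still leaves the length under the mask, so the over-read is limited to the cap rather than roughly 64 KB. Any read long enough to reach the guard region raises instead of returning data, which is why the two measures complement each other.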

Conclusions

EMFI is a simple way of abusing the USB stack in the Trezor. You’ll find a similar vulnerability in a number of USB stacks, meaning this type of attack should be something you validate against in your own devices.

More details of this example will be available in the book Jasper & I are working on for (eventual?) release.